Services and Solutions

Our Services

The challenge

Most organisations assumed that their business framework would stay static when they were at the nascent stage of designing their enterprise database. In the journey towards digital maturity, it is crucial to build a dynamic framework for the enterprise data ecosystem and create a central data collection hub. Traditional data models served as the foundation of information for businesses. However, new technologies require the incumbent platforms to be re-engineered to meet the growing needs of scalability, constant availability and performance. Traditional frameworks are unable to link risk programmes with relevant customer data while integrating data drawn from several sources.

Businesses now know that it is vital to analyse structured data to assess risk effectively and monitor critical control points while adhering to the agreed SLAs. It is often challenging to assess the quality and accuracy of data and to identify the correct data sources. It is also essential to identify and procure external data continuously. As a result, organisations must integrate analytics across the various workflows of their operations to measure and analyse the data warehouse and produce KPI-based reports.

How Neural Data can help

Organisations need a robust data ecosystem covering a disparate set of input data. This is where Neural Data comes to your rescue. Neural Data is a preferred Oracle Business Intelligence and OCDM Implementation Partner. We have extensive experience in Artificial Intelligence and Machine Learning modelling. We have handled more than 2 TeraBytes of data every day while integrating with 22+ operations data sources. Our team has helped different departments of our clients through multi-stage analysis of user data from the rich OCDM data warehouse.

We provide next-generation software services using a robust Business Intelligence framework, ably aided by Artificial Intelligence modelling algorithms. Our quality processes drive continuous process improvement through general-ledger-based reconciliation and a self-service capability on your organisation-wide data lake.

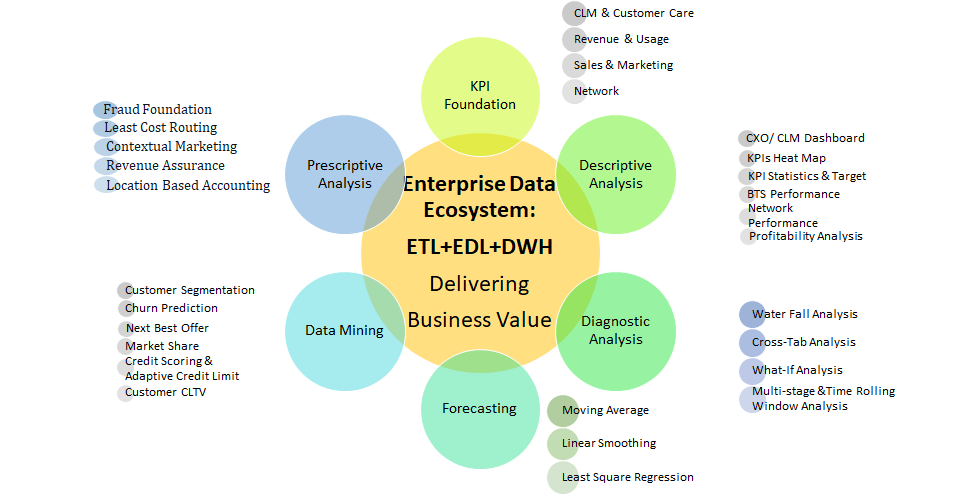

KPI Foundation

It is essential to know the data points that are the sources of the disparate data sets, as they form the core elements of any value chain. The KPI foundation must be built on these data inputs. It will help organisations foresee trends in their operations and tweak their business strategies to bridge any existing gap through 360-degree analytics of the current scenario.

Neural Data draws on its consultants' years of experience to run a data classification and standardisation exercise that lays the foundation for finalising robust KPIs to assess the current and future business potential of organisations. Our consultants also apply administrative and technical controls based on business criticality while ensuring the protection of classified data.

Descriptive Analysis

Once the data is collected from the disparate sources, the systems must facilitate statistical monitoring of the incoming information. Statistical calculations must be undertaken continuously to allow the consultants to utilise predictive analysis for further analytics.

Having applied descriptive analytics in past projects, our consultants draw on their experience across several installations to devise the data aggregation techniques needed to report recent events. The analytics are delivered in a predetermined format that gives senior management real-time insight into the business and supports informed decisions about the future.
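As an illustration of the statistical monitoring described above, here is a minimal Python sketch that aggregates incoming records by source. The record layout, the source names and the `summarise` function are assumptions made for this example; they do not describe any specific Neural Data implementation.

```python
from collections import defaultdict
from statistics import mean, pstdev


def summarise(records):
    """Group (source, value) records by source and compute basic statistics."""
    by_source = defaultdict(list)
    for source, value in records:
        by_source[source].append(value)
    return {
        source: {
            "count": len(values),
            "mean": mean(values),
            "min": min(values),
            "max": max(values),
            "std": pstdev(values),  # population standard deviation
        }
        for source, values in by_source.items()
    }


# Hypothetical sample: (source system, measured value)
sample = [("billing", 10.0), ("billing", 14.0), ("network", 3.0)]
summary = summarise(sample)
print(summary["billing"])
```

In practice the same aggregation would usually run inside the data warehouse or a streaming layer; the sketch only shows the shape of the calculation.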

Diagnostic Analytics

Once you have the descriptive analytics in place, it becomes important to diagnose the reasons for the occurrence of any event, or the chances of its occurrence. You must drill down into the data warehouse and correlate the historical data using advanced data mining and business intelligence functionalities. Understandably, a business must have a customised business analytics model to solve complex issues.

Our experts utilise their prior experience in several projects to devise a proper correlation mechanism from the business intelligence reports and pinpoint the issues affecting the business. Over time, the data is fed to an artificial intelligence model that can predict the possible occurrence of an event and notify the client to take the necessary steps beforehand.

Prescriptive Analysis

What is the use of having a data warehouse without having the means of churning the information into useful data? Once you use sophisticated data mining and prescriptive analysis techniques, you can extract information through the standard processes of pattern recognition and offer the best solution among a myriad of choices.

Neural Data is capable of extracting large volumes of data and undertaking data integration and enrichment along with subsequent high-velocity computing of such data. Our consultants have experience in similar global projects utilising Artificial Intelligence and Machine Learning models to make predictions by comprehending future results ahead of time. In the process, our consultants create an Event Data Lake platform that can help in the advanced computation of data using different analytic services for every use case.

The challenge

Businesses must understand that their progress towards digitalisation is an ongoing process. It requires dealing with a massive amount of data in real time. However, more often than not, the data is read in batches, which undermines the very essence of the activity. Apart from this, security and data privacy are among the other issues faced by consultants. While automation is the order of the day, Artificial Intelligence processes can help you address some of the data-related challenges. However, Data Engineers spend most of their time getting the data into a usable format, as this is the prime necessity for applying the relevant Big Data models.

How Neural Data can help

Studies also show that at least 70% of data projects fail. This is because effective data integration is missing. There is a need to collect and integrate the massive amount of data into an Artificial Intelligence-enabled architecture. It is also important to channel the knowledge gained so that the downstream applications can take advantage of it. Neural Data has over 200 person-years of global experience in handling complex data enrichment and high-volume, high-velocity computing with ease. Our engineers also overcome the challenges of delivering data to downstream applications and adhere to the preset SLAs.

Most consultants fail to create the model when memory restrictions meet a deluge of millions of records. However, the experts from Neural Data run sophisticated algorithms that allow them to run these models with very high accuracy levels. Our robust data engineering capability, values and commitment have made us a unique and trusted partner in delivering a thriving enterprise data ecosystem by integrating a vast amount of data into a Business Intelligence architecture. Our framework also has 800+ KPI-based reports that are pre-built in the model, based on use cases from our prior experience.

Development of Artificial Intelligence Data Models

Data Engineers must have a good sense of the data, visualise it in two or three dimensions and run a few statistics to understand the data better. There is no perfect model, and engineers must deal with trade-offs. This is where experts from Neural Data help businesses understand the pros and cons of each such trade-off and decide intelligently which model to adopt. Neural Data brings with it global project execution and best practices along with a robust Business Intelligence framework and strategy. We engage in critical process improvements involving data standardisation using optimum hardware, software and human resources.

One such model used by our engineers is Digital Twin technology, which requires us to build a digital replica of the real-world systems of the organisation. It is critical to create a simulation of the various stakeholders of the business, viz. customer, partner, process, equipment, etc., that leads to a holistic view of the entire company. To realise an exact digital twin, consultants must map the behavioural aspects and lifecycle of the stakeholders, along with their lifetime value. The model must be updated continuously in real time to ensure accuracy and effectiveness.

Development of Machine Learning Data Models

Machine Learning models have extensive possibilities to improve the productivity of an organisation and maximise its revenues. However, project teams need to select the data model that is ideal for the particular scenario, because some algorithms are better at solving a specific problem than others. The models are used to send mission-critical KPI-based reports to downstream applications periodically. Typical applications include context-based marketing and fraud management. Our Artificial Intelligence-based framework has seven in-built Machine Learning models that support senior management in improved decision-making.

Our engineers develop predictive models using improved data analysis from different inputs. We convert raw legacy data into predictive engines that help in better data analytics. The sophisticated Machine Learning models understand the scenarios and help in recognising trends and making accurate predictions. Our algorithms are scalable to handle huge volumes of data, and we have been processing more than 2 TeraBytes of data in a day at some of our client sites.

Context-based triggers & Real-time Analytics

Businesses need to analyse the disparate data systems on an ongoing basis and distil useful information to help senior management in the decision-making process. As an example, the staging layer of the Business Intelligence model at a telco receives information from a host of network elements along with customer data. It is essential to set up several triggers as control points that will help in assessing the incoming data.

Some of the applicable triggers could be the following:

- Last N Event Triggers

- Twin Lifetime Value Triggers

- Twin Lifecycle Triggers

- Artificial Intelligence and Machine Learning-based triggers

- Enterprise-based triggers (B2B/B2C)

Based on these triggers, the model can undertake real-time analytics and suggest the next course of action. It could be a subtle difference, like presenting viral or peer-to-peer marketing. The model can also recommend upsell or cross-sell solutions to different prospects.
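The trigger types listed above are not defined in detail here. As one plausible reading of a "Last N Event" trigger, the Python sketch below fires when enough of the most recent N events match a rule. The class name, the `status` field and the "3 of the last 5" rule are hypothetical, chosen only for illustration.

```python
from collections import deque


class LastNEventTrigger:
    """Fires when at least `threshold` of the last `n` events match a predicate."""

    def __init__(self, n, threshold, predicate):
        self.window = deque(maxlen=n)  # keeps only the n most recent results
        self.threshold = threshold
        self.predicate = predicate

    def observe(self, event):
        """Record one event and report whether the trigger condition holds."""
        self.window.append(bool(self.predicate(event)))
        return sum(self.window) >= self.threshold


# Hypothetical rule: fire when 3 of the last 5 calls were dropped.
trigger = LastNEventTrigger(
    n=5, threshold=3, predicate=lambda e: e["status"] == "dropped"
)
statuses = ["ok", "dropped", "dropped", "ok", "dropped", "ok", "ok", "ok"]
fired = [trigger.observe({"status": s}) for s in statuses]
print(fired)
```

A fixed-size window keeps memory constant per customer or network element, which matters when the trigger runs against a continuous event stream.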

The challenge

Organisations have been facing an explosion of data from various sources. It is essential to sift through this voluminous data to bring out information that can help to change the course of the business. The diversity of data makes it crucial to bring out the necessary information related to customer behaviour, support activities and business prospects. It is essential to channelise this large volume of data and bring out meaningful reports and formulate KPIs and control points that will allow the senior management to take important decisions about the growth of the company.

How Neural Data can help

The insights can help in formulating better customer experience and engagement by learning about their behavioural patterns. It is crucial to have a dedicated solutions partner who can act as a catalyst in the transformation and provide business insights that can lead to future products and automated workflows across the organisation. Neural Data has an incumbent business intelligence framework that starts by outlining how the required data will be obtained from the various data sources and processed before forwarding to downstream applications.

Our ISO-certified processes allow organisations to convert data into assets to further the chances of moving into other lines of business or tweaking business strategies. Our managed services help in managing organisation-wide risks by integrating data from multiple sources and using prescriptive analytics to come up with a holistic view of the data through reports and dashboards.

Managing the Enterprise Data Warehouse

Businesses receive information from several touchpoints, and it is essential to process it to gather meaningful inputs for tweaking business strategies in future. Cutting-edge technologies allow organisations to transform the customer experience and improve customer lifetime value by proposing new services effectively or improving the customer service experience.

Our OCDM experts formulate the ETL workflows that populate the data warehouses by pulling data from the disparate sources. The schedule of the ETL is set up after a discussion with the client. We utilise use cases from earlier installations as accelerators to help us formulate the workflows that are critical for your business. The challenge can be handled by developing robust business intelligence frameworks that take input data from several touchpoints and convert it into KPI-based reports. Companies need to devise new ways to maintain a technological edge and have an experienced partner to manage the enterprise data warehouse.

We bring to the table our robust data engineering capabilities and best practices that have been deployed globally at our esteemed clients. Our robust business intelligence frameworks are capable of integrating with over 22 data sources that generate up to 2 TeraBytes of data every day. Our solutions have allowed our clients to understand customer behaviour better and implement strategies to retain customers, while also tweaking existing systems to gain new ones.

The business receives a range of both unstructured and structured data from a variety of disparate sources. Our data scientists utilise a robust Artificial Intelligence framework built by combining the best practices from prior implementations globally. The obsolete data is purged from the system by using advanced analytics techniques.

Neural Data has helped its clients in the delivery of 800+ location-based KPIs that were built on a self-service capability across the entire data warehouse. We have been ranked among the best Oracle OCDM implementers and have managed large data warehouses through the implementation of a robust framework and a comprehensive data ecosystem.

Data Reconciliation

Our software experts utilise a business intelligence framework that ensures fail-proof data integrity checks at several levels. Our processes compare the target data against the transformed source data to ensure that the data transformation exercise has been performed correctly. The checks start with the ETL process, before the data is loaded into the system. We use dashboards that keep us continuously informed about data quality and help us apply rules to clean the data. Ongoing reconciliation keeps the data consistent and ensures that the relevant personnel are informed of any mismatch without fail.

Once the data is received in the appropriate format in the data lake, it is vital to check for missing data. Neural Data utilises global best practices that involve two levels of data reconciliation to verify the data and to ensure the trustworthiness and completeness of the data. Our reconciliation model consists of a file-level and a subsequent day-level checking that reconciles the processed records with the files received.
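The two reconciliation levels described above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: the manifest layout (`day`, `records`), the file names and both function names are hypothetical and are not taken from the Neural Data framework.

```python
from collections import defaultdict


def reconcile_files(received, processed):
    """File-level check: return {file: (expected, actual)} for mismatched files."""
    mismatches = {}
    for name, info in received.items():
        actual = processed.get(name, 0)  # a file that was never processed counts as 0
        if actual != info["records"]:
            mismatches[name] = (info["records"], actual)
    return mismatches


def reconcile_days(received, processed):
    """Day-level check: compare per-day totals of received and processed records."""
    expected = defaultdict(int)
    actual = defaultdict(int)
    for name, info in received.items():
        expected[info["day"]] += info["records"]
        actual[info["day"]] += processed.get(name, 0)
    return {
        day: (expected[day], actual[day])
        for day in expected
        if expected[day] != actual[day]
    }


# Hypothetical manifest of files received and record counts actually processed.
received = {
    "cdr_001.csv": {"day": "2024-01-01", "records": 100},
    "cdr_002.csv": {"day": "2024-01-01", "records": 50},
    "cdr_003.csv": {"day": "2024-01-02", "records": 70},
}
processed = {"cdr_001.csv": 100, "cdr_002.csv": 48, "cdr_003.csv": 70}

file_mismatches = reconcile_files(received, processed)
day_mismatches = reconcile_days(received, processed)
print(file_mismatches, day_mismatches)
```

The file-level result points to the exact file that lost records, while the day-level result confirms whether the daily totals still balance once all files are in.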

Data Delivery

An ideal data delivery platform must have a flexible architecture. It is critical to the analytics value chain and to deriving value by turning customer data into meaningful reports. Consistent data must be delivered to several downstream applications and stakeholders across the entire value system. Our framework allows us to replicate the data easily while segregating critical business events.

It is critical to pinpoint any errors in the data after transformation. To do so, the target and the source data are compared to catch any mismatch. The requirement for high-quality data can be met by devising proper assessment processes that verify the correctness of the metadata, the uniqueness of the target data and the correctness of the data aggregation.
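A minimal Python sketch of such post-transformation checks is shown below. It covers the source-to-target comparison, key uniqueness and aggregate totals; metadata checks are left out. The `check_target` function, the field names and the cents-to-currency transform are assumptions made for this example.

```python
def check_target(source_rows, target_rows, transform, key, amount):
    """Run three post-load checks and return {check_name: passed}."""
    expected = [transform(row) for row in source_rows]
    keys = [row[key] for row in target_rows]
    expected_total = sum(row[amount] for row in expected)
    target_total = sum(row[amount] for row in target_rows)
    return {
        # every transformed source row appears in the target, and nothing else
        "rows_match": sorted(expected, key=lambda r: r[key])
        == sorted(target_rows, key=lambda r: r[key]),
        # no duplicate keys in the target
        "keys_unique": len(keys) == len(set(keys)),
        # aggregated amounts agree within a small tolerance
        "totals_match": abs(expected_total - target_total) < 1e-9,
    }


# Hypothetical transform: amounts arrive in cents and are stored in currency units.
def to_target(row):
    return {"id": row["id"], "amount": row["cents"] / 100}


source = [{"id": 1, "cents": 1250}, {"id": 2, "cents": 300}]
good_target = [{"id": 2, "amount": 3.0}, {"id": 1, "amount": 12.5}]
bad_target = [{"id": 1, "amount": 12.5}, {"id": 1, "amount": 12.5}]

good = check_target(source, good_target, to_target, key="id", amount="amount")
bad = check_target(source, bad_target, to_target, key="id", amount="amount")
print(good, bad)
```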

In the era of shrinking IT budgets, businesses must chalk out a cost-effective path to reach their objectives. Delivering the predetermined set of reports to the downstream applications can enhance the value to the company. It will help to measure the selected control points against the threshold KPIs. Neural Data has been instrumental in off-the-shelf delivery of over 800 location-based KPI reports.

The challenge

Businesses must mine the data they have at their disposal and bring out KPI-based reports to tweak their business models to face the ever-changing business scenario. Companies face issues in gathering meaningful insights from a 360-degree view of their business. It is essential to deliver Artificial Intelligence-based use cases based on global best practices, but developing a data model is fraught with severe issues.

First of all, the internal team is ill-equipped and not updated with the latest technologies and best practices in Business Intelligence and Data Warehousing. The Data Models being deployed at various companies were not built using specific use cases relevant to the business model of the company. Neural Data has come up with an analytical data model that is based on the Artificial Intelligence-based Data Model of the TeleManagement Forum. We understand that despite OCDM having reached the end of life, several businesses would prefer to continue with it for a few years more.

There are many reasons for staying with OCDM. It is pre-optimised with a foundation layer, an analytical layer and a visualisation layer. The data model can be fed with various data types, and a few pre-built intra-ETL processes are already in place. The data model is simple to use and leverages only the data that is useful for creating KPI-based reports. It is also flexible and can be altered as needed.

How Neural Data can help

Neural Data has been an official OCDM implementer since 2015, and its senior management has over 200 person-years of experience in implementing Business Intelligence solutions successfully at several clients across the globe. You can deploy our senior resources directly into your team as consultants to help your managed services partner carry out their services diligently.

Scenario-based use cases

We have created 50 use cases based on our experience of deploying OCDM successfully at several clients. They allow us to mine the critical data for you effectively, undertake three layers of analysis, create KPI-based reports in real time and forward them to senior management periodically. They also allow you to make full use of computing KPIs, analysing the data in real time and forecasting the KPIs correctly.

Use of prescriptive analysis

Our experts use prescriptive analysis models that cover regular feedback of predetermined reports based on set KPI-based control points. Given our prior experience across multiple clients, the delivery of the first three layers is foolproof, which ensures that the selected model will work for the use cases decided for your business.

Simplified data models

Our data models consider the latest TeleManagement Forum specifications and are simplified to utilise the data that can be used to extract meaningful reports. Moreover, the use cases you run are selected based on your business model and those that do not fit are removed from the model. We can also allow you to customise the model and add specific use cases that are required by your business.

Saves resources

Neural Data uses techniques that help our clients save substantially on software and hardware resources. Our clients save on database licences by offloading the data warehouse while enhancing the accessibility and performance of the data models. Our Business Intelligence frameworks can be implemented using any RDBMS. We do not favour any single database, though we are masters in Oracle. Moreover, you also save on the Oracle support cost by receiving world-class support at only a fraction of the cost.

Revenue Assurance

Given the considerable investment involved in laying down the infrastructure, it is critical for organisations to prevent fraud and leakage of revenue as much as possible. The organisational assets must be monitored and controlled in real time. For a telecom operator, this encompasses the entire eTOM model, covering the whole value chain involving the vendors, human resources, purchase, internal assets, the sales and distribution channels and the retailer network. A significant chunk of revenue leakage can occur through the billing system and the interconnect system. It becomes essential for the telco to minimise revenue leakage by deploying useful control points at relevant areas in the entire value chain to send ahead-of-time alerts to authorised personnel.

Most businesses have a complex business model in which the operations and finance departments have very little correlation. It is critical to ensure that the journal entries posted in the ERP and the Billing system of the business match, as this helps to send out accurate reports to the government authorities. Consultants must handhold their clients in this activity, but they are rarely able to sync both these functions. However, it is crucial to post the general ledger correctly and prevent any mismatch.

The subject matter experts from Neural Data use advanced models that allow both the operations data and the General Ledger of the finance department to be in sync with each other. We have a homegrown data model that helps us in our mission to minimise the risk of penal action for our clients. It uses an incumbent unbiased technology coupled with the domain knowledge of our consultants. This requires a deep understanding of the underlying business principles, and the accuracy of the model used has a direct impact on the targeted outcome.
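To make the idea of keeping operations data and the general ledger in sync more concrete, here is a minimal Python sketch that compares per-account totals posted in an ERP with those from a billing system. It is not the homegrown model mentioned above; the account codes, the entry layout and the `ledger_mismatches` function are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def ledger_mismatches(erp_entries, billing_entries):
    """Return {account: (erp_total, billing_total)} where the totals differ."""
    erp = defaultdict(Decimal)      # Decimal() starts each account at 0
    billing = defaultdict(Decimal)
    for account, amount in erp_entries:
        erp[account] += Decimal(amount)
    for account, amount in billing_entries:
        billing[account] += Decimal(amount)
    return {
        account: (erp[account], billing[account])
        for account in sorted(set(erp) | set(billing))
        if erp[account] != billing[account]
    }


# Hypothetical journal entries as (account code, amount) pairs.
erp_entries = [
    ("4000-revenue", "100.00"),
    ("4000-revenue", "50.00"),
    ("2100-vat", "15.00"),
]
billing_entries = [("4000-revenue", "150.00"), ("2100-vat", "14.50")]

mismatches = ledger_mismatches(erp_entries, billing_entries)
print(mismatches)
```

Amounts are parsed as `Decimal` rather than floats so that currency totals compare exactly.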

Our revenue assurance programme is fully interfaced with the analytical data model to manage financial audit, revenue and payment verification, and reconciliation across the revenue chain as per country-specific accounting practice and financial regulatory requirements. We are capable of delivering financial and accounting reports, viz. Balance Sheet, General Ledger, Trial Balance, Profit & Loss, Journal Book, Subscriber Ledger, Tax/VAT Statement and financial regulatory reports. The team also aids in revenue reconciliation against the incumbent ERP solution.